#embedded intelligence


Text

https://www.futureelectronics.com/p/semiconductors--Led-lighting-components--led-driver-modules-rev--constant-voltage-acdc-led-drivers/vlm100w-24-erp-power-6095001

LED driver power electronics, Dimmable led driver, embedded intelligence

100 - 277Vac, 96W, 24V, IP20 LED Driver

#ERP Power#VLM100W-24#Constant Voltage AC/DC LED Drivers#power electronics#Dimmable led driver#embedded intelligence#Lighting Components#led-supplies#circuit#voltage power supply#extensive dimmer compatibility#high efficiency LED driver


Text

The global embedded intelligence market is expected to grow significantly, reaching a market value of US$ 861,356.7 million by the year 2033. This substantial increase comes from a base value of US$ 28,057.6 million in 2023. The market is projected to expand at a CAGR of 11.2% during the forecast period.
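As a rough check on how the three figures above relate, the compound annual growth rate formula can be applied to them directly. The sketch below assumes a ten-year horizon (2023 to 2033), and its helper names are hypothetical. It only restates arithmetic on the numbers quoted in the post.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value reached after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the post, in US$ million.
start_2023 = 28_057.6
end_2033 = 861_356.7
years = 10  # assumption: 2023 base year to 2033

rate_from_endpoints = implied_cagr(start_2023, end_2033, years)
value_from_rate = project(start_2023, 0.112, years)

print(f"CAGR implied by the two market values: {rate_from_endpoints:.1%}")
print(f"2033 value implied by an 11.2% CAGR: US$ {value_from_rate:,.1f} million")
```

Under these assumptions the two endpoints imply a rate of roughly 41% a year, while an 11.2% rate compounds to about US$ 81,000 million, so the quoted end value and growth rate do not describe the same growth path and are worth checking against the original report.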


Text

AI Doesn’t Necessarily Give Better Answers If You’re Polite

New Post has been published on https://thedigitalinsider.com/ai-doesnt-necessarily-give-better-answers-if-youre-polite/


Public opinion on whether it pays to be polite to AI shifts almost as often as the latest verdict on coffee or red wine – celebrated one month, challenged the next. Even so, a growing number of users now add ‘please’ or ‘thank you’ to their prompts, not just out of habit, or concern that brusque exchanges might carry over into real life, but from a belief that courtesy leads to better and more productive results from AI.

This assumption has circulated among both users and researchers, with prompt-phrasing studied in research circles as a tool for alignment, safety, and tone control, even as user habits reinforce and reshape those expectations.

For instance, a 2024 study from Japan found that prompt politeness can change how large language models behave, testing GPT-3.5, GPT-4, PaLM-2, and Claude-2 on English, Chinese, and Japanese tasks, and rewriting each prompt at three politeness levels. The authors of that work observed that ‘blunt’ or ‘rude’ wording led to lower factual accuracy and shorter answers, while moderately polite requests produced clearer explanations and fewer refusals.

Additionally, Microsoft recommends a polite tone with Copilot, from a performance rather than a cultural standpoint.

However, a new research paper from George Washington University challenges this increasingly popular idea, presenting a mathematical framework that predicts when a large language model’s output will ‘collapse’, transitioning from coherent to misleading or even dangerous content. Within that context, the authors contend that being polite does not meaningfully delay or prevent this ‘collapse’.

Tipping Off

The researchers argue that polite language usage is generally unrelated to the main topic of a prompt, and therefore does not meaningfully affect the model’s focus. To support this, they present a detailed formulation of how a single attention head updates its internal direction as it processes each new token, ostensibly demonstrating that the model’s behavior is shaped by the cumulative influence of content-bearing tokens.

As a result, polite language is posited to have little bearing on when the model’s output begins to degrade. What determines the tipping point, the paper states, is the overall alignment of meaningful tokens with either good or bad output paths – not the presence of socially courteous language.

An illustration of a simplified attention head generating a sequence from a user prompt. The model starts with good tokens (G), then hits a tipping point (n*) where output flips to bad tokens (B). Polite terms in the prompt (P₁, P₂, etc.) play no role in this shift, supporting the paper’s claim that courtesy has little impact on model behavior. Source: https://arxiv.org/pdf/2504.20980

If true, this result contradicts both popular belief and perhaps even the implicit logic of instruction tuning, which assumes that the phrasing of a prompt affects a model’s interpretation of user intent.

Hulking Out

The paper examines how the model’s internal context vector (its evolving compass for token selection) shifts during generation. With each token, this vector updates directionally, and the next token is chosen based on which candidate aligns most closely with it.

When the prompt steers toward well-formed content, the model’s responses remain stable and accurate; but over time, this directional pull can reverse, steering the model toward outputs that are increasingly off-topic, incorrect, or internally inconsistent.

The tipping point for this transition (which the authors define mathematically as iteration n*) occurs when the context vector becomes more aligned with a ‘bad’ output vector than with a ‘good’ one. At that stage, each new token pushes the model further along the wrong path, reinforcing a pattern of increasingly flawed or misleading output.

The tipping point n* is calculated by finding the moment when the model’s internal direction aligns equally with both good and bad types of output. The geometry of the embedding space, shaped by both the training corpus and the user prompt, determines how quickly this crossover occurs:

An illustration depicting how the tipping point n* emerges within the authors’ simplified model. The geometric setup (a) defines the key vectors involved in predicting when output flips from good to bad. In (b), the authors plot those vectors using test parameters, while (c) compares the predicted tipping point to the simulated result. The match is exact, supporting the researchers’ claim that the collapse is mathematically inevitable once internal dynamics cross a threshold.

Polite terms don’t influence the model’s choice between good and bad outputs because, according to the authors, they aren’t meaningfully connected to the main subject of the prompt. Instead, they end up in parts of the model’s internal space that have little to do with what the model is actually deciding.

When such terms are added to a prompt, they increase the number of vectors the model considers, but not in a way that shifts the attention trajectory. As a result, the politeness terms act like statistical noise: present, but inert, and leaving the tipping point n* unchanged.
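The geometric intuition in the last two paragraphs can be illustrated with a toy example. This is a minimal sketch under strong assumptions, not the paper's formulation: the three-dimensional vectors, the `context_from` and `preferred` helpers, and the choice to place the polite token exactly orthogonal to both output directions are all hypothetical. It keeps only two ideas from the description above: the context is a plain sum of token vectors, and the next output is whichever candidate aligns best with that context.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def context_from(tokens):
    """Linear dynamics: the context vector is the sum of all token vectors."""
    return tuple(sum(token[i] for token in tokens) for i in range(3))


def preferred(context, good, bad):
    """Pick the output direction that the context aligns with more closely."""
    return "G" if dot(context, good) >= dot(context, bad) else "B"


# Hypothetical embedding directions for illustration only.
good = (1.0, 0.0, 0.0)
bad = (0.0, 1.0, 0.0)
polite = (0.0, 0.0, 1.0)  # assumed orthogonal to both good and bad

# Substantive prompt tokens lean somewhat more toward "good" than "bad".
prompt = [(0.6, 0.4, 0.0), (0.7, 0.5, 0.0)]

plain = context_from(prompt)
courteous = context_from(prompt + [polite, polite])

margin_plain = dot(plain, good) - dot(plain, bad)
margin_courteous = dot(courteous, good) - dot(courteous, bad)

print(preferred(plain, good, bad), preferred(courteous, good, bad))
print(margin_plain == margin_courteous)
```

In this toy setup the polite tokens change the context vector but leave the good-versus-bad margin untouched, which is the sense in which such terms are "present, but inert". Whether real embeddings of courteous words are this cleanly separated from content directions is exactly what the simplified model assumes rather than demonstrates.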

The authors state:

‘[Whether] our AI’s response will go rogue depends on our LLM’s training that provides the token embeddings, and the substantive tokens in our prompt – not whether we have been polite to it or not.’

The model used in the new work is intentionally narrow, focusing on a single attention head with linear token dynamics – a simplified setup where each new token updates the internal state through direct vector addition, without non-linear transformations or gating.

This simplified setup lets the authors work out exact results and gives them a clear geometric picture of how and when a model’s output can suddenly shift from good to bad. In their tests, the formula they derive for predicting that shift matches what the model actually does.

Chatting Up..?

However, this level of precision only works because the model is kept deliberately simple. While the authors concede that their conclusions should later be tested on more complex multi-head models such as the Claude and ChatGPT series, they also believe that the theory remains replicable as attention heads increase, stating*:

‘The question of what additional phenomena arise as the number of linked Attention heads and layers is scaled up, is a fascinating one. But any transitions within a single Attention head will still occur, and could get amplified and/or synchronized by the couplings – like a chain of connected people getting dragged over a cliff when one falls.’

An illustration of how the predicted tipping point n* changes depending on how strongly the prompt leans toward good or bad content. The surface comes from the authors’ approximate formula and shows that polite terms, which don’t clearly support either side, have little effect on when the collapse happens. The marked value (n* = 10) matches earlier simulations, supporting the model’s internal logic.

What remains unclear is whether the same mechanism survives the jump to modern transformer architectures. Multi-head attention introduces interactions across specialized heads, which may buffer against or mask the kind of tipping behavior described.

The authors acknowledge this complexity, but argue that attention heads are often loosely coupled, and that the sort of internal collapse they model could be reinforced rather than suppressed in full-scale systems.

Without an extension of the model or an empirical test across production LLMs, the claim remains unverified. However, the mechanism seems sufficiently precise to support follow-on research initiatives, and the authors provide a clear opportunity to challenge or confirm the theory at scale.

Signing Off

At the moment, the topic of politeness towards consumer-facing LLMs appears to be approached from one of two standpoints: the pragmatic view that trained systems may respond more usefully to polite inquiry, or the concern that a tactless and blunt communication style with such systems risks spreading into the user’s real social relationships, through force of habit.

Arguably, LLMs have not yet been used widely enough in real-world social contexts for the research literature to confirm the latter case; but the new paper does cast some interesting doubt upon the benefits of anthropomorphizing AI systems of this type.

A study last October from Stanford suggested (in contrast to a 2020 study) that treating LLMs as if they were human additionally risks degrading the meaning of language, concluding that ‘rote’ politeness eventually loses its original social meaning:

‘[A] statement that seems friendly or genuine from a human speaker can be undesirable if it arises from an AI system since the latter lacks meaningful commitment or intent behind the statement, thus rendering the statement hollow and deceptive.’

However, roughly 67 percent of Americans say they are courteous to their AI chatbots, according to a 2025 survey from Future Publishing. Most said it was simply ‘the right thing to do’, while 12 percent confessed they were being cautious – just in case the machines ever rise up.

* My conversion of the authors’ inline citations to hyperlinks. To an extent, the hyperlinks are arbitrary/exemplary, since the authors at certain points link to a wide range of footnote citations, rather than to a specific publication.

First published Wednesday, April 30, 2025. Amended Wednesday, April 30, 2025 15:29:00, for formatting.

#2024#2025#ADD#Advanced LLMs#ai#AI chatbots#AI systems#Anderson's Angle#Artificial Intelligence#attention#bearing#Behavior#challenge#change#chatbots#chatGPT#circles#claude#coffee#communication#compass#complexity#content#Delay#direction#dynamics#embeddings#English#extension#focus


Text

AI Math Agents

We know that we are in an AI take-off; what is new is that we are also in a math take-off. A math take-off means using math as a formal language, beyond the human-facing math-as-math use case, so that AI can interface with the computational infrastructure. The message of generative AI and LLMs (large language models such as GPT) is not that they speak natural language to humans, but that they speak formal languages (programmatic code, mathematics, physics) to the computational infrastructure. This implies the ability to create a much larger problem-solving apparatus for applications that benefit humanity in biology, energy, and space science, though not without risk.


Text

# Draft Policy: Intelligence Framework for Detecting and Countering Embedded Corrupt Actors

---

### **Policy Number:** \[Insert ID]

### **Effective Date:** \[Insert Date]

### **Review Cycle:** Annual or as required

### **Approved by:** \[Approving Authority]

---

## 1. **Purpose**

This policy establishes the framework for the systematic collection, analysis, and dissemination of intelligence related to embedded corrupt actors within organizations, governments, and military entities who may engage in sabotage or clandestine relocation activities to Earth upon imminent detection.

---

## 2. **Scope**

This policy applies to all personnel, departments, and units involved in intelligence, counterintelligence, cybersecurity, and security operations across \[Organization/Agency]. It governs the methods and tools used to detect, monitor, and respond to insider threats and related clandestine activities.

---

## 3. **Objectives**

* Identify and mitigate risks posed by embedded corrupt actors with timely, actionable intelligence.

* Maintain continuous situational awareness of potential sabotage and clandestine relocation attempts.

* Integrate multi-domain intelligence sources and advanced analytics to detect covert threats.

* Protect organizational assets, personnel, and strategic interests from insider-enabled attacks.

* Facilitate coordinated responses with partner agencies and allied entities.

---

## 4. **Intelligence Gathering Principles**

### 4.1. **Multi-Domain Collection**

* Employ human intelligence (HUMINT), signals intelligence (SIGINT), cyber intelligence, geospatial intelligence (GEOINT), open-source intelligence (OSINT), and sensor data.

* Prioritize the integration and fusion of intelligence from diverse sources to maximize detection capability.

### 4.2. **Continuous Monitoring**

* Maintain ongoing surveillance of personnel behavior, communications, network activity, and geospatial indicators for anomalies.

* Use AI-driven tools for real-time detection of suspicious patterns and insider threat indicators.

### 4.3. **Legal and Ethical Compliance**

* Ensure all intelligence activities comply with applicable laws, regulations, and organizational ethical standards.

* Respect privacy rights and balance security needs with individual freedoms.

---

## 5. **Roles and Responsibilities**

| Role                           | Responsibilities                                                              |
| ------------------------------ | ----------------------------------------------------------------------------- |
| **Chief Intelligence Officer** | Overall oversight of intelligence operations and policy compliance.           |
| **Counterintelligence Units**  | Conduct insider threat investigations and vetting programs.                   |
| **Cybersecurity Teams**        | Monitor and respond to cyber threats and AI integrity attacks.                |
| **AI/Analytics Teams**         | Develop and maintain behavioral and anomaly detection models.                 |
| **Operations Command**         | Coordinate tactical responses based on intelligence reports.                  |
| **Legal & Compliance**         | Review intelligence methods for regulatory adherence.                         |
| **Personnel Security**         | Conduct background checks, psychological assessments, and continuous vetting. |

---

## 6. **Operational Procedures**

### 6.1. **Data Collection and Surveillance**

* Implement surveillance technologies and HUMINT programs according to mission priorities.

* Regularly audit AI models and data sources for integrity and bias.

* Establish secure communication channels for intelligence sharing and reporting.

### 6.2. **Analysis and Fusion**

* Utilize AI and human analysts to correlate data across domains.

* Produce actionable intelligence reports and threat assessments.

* Conduct regular red team exercises simulating insider threats.

### 6.3. **Response and Mitigation**

* Activate rapid response protocols upon detection of credible threats.

* Coordinate with law enforcement, security, and allied agencies.

* Engage in counterintelligence operations including deception and asset control.

---

## 7. **Training and Awareness**

* Provide mandatory training for personnel on insider threat awareness and reporting.

* Conduct periodic workshops on intelligence tools, ethical standards, and data protection.

---

## 8. **Oversight and Accountability**

* Establish an independent oversight committee to review intelligence activities and incident responses.

* Implement mechanisms for whistleblower protection and anonymous reporting.

* Require periodic audits and compliance reports to senior leadership.

---

## 9. **Data Security and Retention**

* Protect all collected intelligence with appropriate cybersecurity measures.

* Retain data according to legal requirements and organizational policies.

* Dispose of intelligence materials securely when no longer needed.

---

## 10. **Review and Amendment**

* This policy shall be reviewed annually or after any significant incident.

* Amendments shall be proposed by the Chief Intelligence Officer and approved by \[Approving Authority].

---

**End of Policy**


Text

What are power optimization techniques in embedded AI systems?

Power efficiency is a critical concern in embedded AI systems, particularly for battery-operated and resource-constrained devices. Optimizing power consumption ensures longer operational life, reduced heat dissipation, and improved overall efficiency. Several key techniques help achieve this optimization:

Dynamic Voltage and Frequency Scaling (DVFS): This technique adjusts the processor’s voltage and clock speed dynamically based on workload requirements. Lowering the frequency during idle or low-computation periods significantly reduces power consumption.

Efficient Hardware Design: Using low-power microcontrollers (MCUs), dedicated AI accelerators, and energy-efficient memory architectures minimizes power usage. AI-specific hardware, such as Edge TPUs and NPUs, improves performance while reducing energy demands.

Sleep and Low-Power Modes: Many embedded AI systems incorporate deep sleep, idle, or standby modes when not actively processing data. These modes significantly cut down power usage by shutting off unused components.

Model Quantization and Pruning: Reducing the precision of AI models (quantization) and eliminating unnecessary model parameters (pruning) lowers computational overhead, enabling energy-efficient AI inference on embedded systems.

Energy-Efficient Communication Protocols: For IoT-based embedded AI, using low-power wireless protocols like Bluetooth Low Energy (BLE), Zigbee, or LoRa helps reduce power consumption during data transmission.

Optimized Code and Algorithms: Writing power-efficient code, using inference frameworks built for constrained devices (e.g., TensorFlow Lite, a common choice for TinyML workloads), and reducing redundant computations lower energy demands in embedded AI applications.

Adaptive Sampling and Edge Processing: Instead of sampling at a fixed rate and continuously transmitting all sensor data to the cloud, embedded AI systems can sample less often when little is changing and process data on-device, reducing the power spent on both sensing and communication.
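To make the quantization and pruning item in the list above more concrete, here is a minimal sketch in plain Python. It assumes symmetric per-tensor int8 quantization and simple magnitude pruning, which are common textbook variants rather than the method of any particular framework. The function names and the sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, fraction):
    """Zero out the smallest-magnitude `fraction` of the weights."""
    k = int(len(weights) * fraction)
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, removed = [], 0
    for w in weights:
        if abs(w) <= threshold and removed < k:
            pruned.append(0.0)
            removed += 1
        else:
            pruned.append(w)
    return pruned


# Hypothetical layer weights for illustration.
weights = [0.82, -0.41, 0.03, -0.007, 0.56, -1.27, 0.002, 0.19]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
pruned = prune_by_magnitude(weights, 0.5)

print(quantized, scale)
print(f"largest rounding error: {max_error:.5f}")
print(pruned)
```

Each weight then needs one byte instead of four (for 32-bit floats), at the cost of a rounding error of at most half the scale per weight. The zeroed weights only save energy if the runtime or hardware can actually skip them, which is a deployment detail this sketch does not cover.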

Mastering these power optimization techniques is crucial for engineers working on intelligent devices. Enrolling in an embedded system certification course can help professionals gain expertise in designing efficient, low-power AI-driven embedded solutions.


Text

Edge computing is revolutionizing embedded systems by enabling real-time data processing without relying on the cloud. Instead of sending every piece of information to remote servers, smart devices now process data locally, leading to:

- Faster response times

- Enhanced security & privacy

- Lower bandwidth & cloud costs

- More reliable performance in remote areas

Where is Edge Computing Used?

- Smart Homes & IoT Devices

- Healthcare Wearables & Patient Monitoring

- Autonomous Vehicles & Robotics

- Industrial Automation & Predictive Maintenance

As AI, 5G, and IoT evolve, Edge Computing in Embedded Systems is shaping the future of technology. Are you ready to embrace it?

#EdgeComputing #EmbeddedSystems #SmartTech #IoT #AI #Innovation #TechTrends #FutureTech #DigitalTransformation

#software development#electronics#artificial intelligence#engineering#innovation#web design#hardware#embedded#digital marketing


Text

Predictive Analytics in USA

Predictive analytics in the United States uses data, algorithms, and machine learning to forecast future outcomes, which improves decision-making across industries. AIvHub provides valuable insights and resources to help businesses use predictive analytics to improve efficiency, risk management, and strategic planning.

#aivhub#aiv consultant#Predictive Analytics#aiv#AIV Consultancy#Embedded Analytics#Business Intelligence


Text

Redefine Customer Engagement with AI-Powered Application Solutions

In today’s digital landscape, customer engagement is more crucial than ever. ATCuality’s AI-powered applications redefine how businesses interact with their audience, creating personalized experiences that foster loyalty and drive satisfaction. Our applications utilize cutting-edge AI algorithms to analyze customer behavior, preferences, and trends, enabling your business to anticipate needs and respond proactively. Whether you're in e-commerce, finance, or customer service, our AI-powered applications can optimize your customer journey, automate responses, and provide insights that lead to improved service delivery. ATCuality’s commitment to innovation ensures that each AI-powered application is adaptable, scalable, and perfectly aligned with your brand’s voice, keeping your customers engaged and coming back for more.

#digital marketing#seo services#artificial intelligence#seo marketing#seo agency#seo company#iot applications#amazon web services#azure cloud services#ai powered application#android app development#mobile application development#app design#advertising#google ads#augmented and virtual reality market#augmented reality agency#augmented human c4 621#augmented reality#iot development services#iot solutions#iot development company#iot platform#embedded software#task management#cloud security services#cloud hosting in saudi arabia#cloud computing#sslcertificate#ssl


Text

Advanced Machine Learning Techniques for IoT Sensors

As we explore the realm of advanced machine learning techniques for IoT sensors, it’s clear that the integration of sophisticated algorithms can transform the way we analyze and interpret data. We’ve seen how deep learning and ensemble methods offer powerful tools for pattern recognition and anomaly detection in the massive datasets generated by these devices. But what implications do these advancements hold for real-time monitoring and predictive maintenance? Let’s consider the potential benefits and the challenges that lie ahead in harnessing these technologies effectively.

Overview of Machine Learning in IoT

In today’s interconnected world, machine learning plays a crucial role in optimizing the performance of IoT devices. It enhances our data processing capabilities, allowing us to analyze vast amounts of information in real time. By leveraging machine learning algorithms, we can make informed decisions quickly, which is essential for maintaining operational efficiency.

These techniques also facilitate predictive analytics, helping us anticipate issues before they arise. Moreover, machine learning automates routine tasks, significantly reducing the need for human intervention. This automation streamlines processes and minimizes errors.

As we implement these advanced techniques, we notice that they continuously learn from data patterns, enabling us to improve our systems over time. Resource optimization is another critical aspect. We find that model optimization enhances the performance of lightweight devices, making them more efficient.

Anomaly Detection Techniques

Although we’re witnessing an unprecedented rise in IoT deployments, the challenge of detecting anomalies in these vast networks remains critical. Anomaly detection serves as a crucial line of defense against various threats, such as brute force attacks, SQL injection, and DDoS attacks. By identifying deviations from expected system behavior, we can enhance the security and reliability of IoT environments.

To effectively implement anomaly detection, we utilize Intrusion Detection Systems (IDS) that can be signature-based, anomaly-based, or based on stateful protocol analysis. Anomaly-based systems in particular require significant amounts of IoT data to establish normal behavior profiles, which is where advanced machine learning techniques come into play.

Machine Learning (ML) and Deep Learning (DL) algorithms help us analyze complex data relationships and detect anomalies by distinguishing normal from abnormal behavior. Forming comprehensive datasets is essential for training these algorithms, as they must simulate real-world conditions.

Datasets like IoT-23, DS2OS, and Bot-IoT provide a foundation for developing effective detection systems. By leveraging these advanced techniques, we can significantly improve our ability to safeguard IoT networks against emerging threats and vulnerabilities.

Supervised vs. Unsupervised Learning

Detecting anomalies in IoT environments often leads us to consider the types of machine learning approaches available, particularly supervised and unsupervised learning.

Supervised learning relies on labeled datasets to train algorithms, allowing us to categorize data or predict numerical outcomes. This method is excellent for tasks like spam detection or credit card fraud identification, where outcomes are well-defined.

On the other hand, unsupervised learning analyzes unlabeled data to uncover hidden patterns, making it ideal for anomaly detection and customer segmentation. It autonomously identifies relationships in data without needing predefined outcomes, which can be especially useful in real-time monitoring of IoT sensors.
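As a small illustration of the unsupervised case, the sketch below flags outliers in a stream of sensor readings without using any labels. It relies on a modified z-score built from the median and the median absolute deviation (MAD), a standard robust statistic. The function name, the 3.5 cut-off, and the temperature readings are assumptions chosen for the example, not values from the post.

```python
import statistics


def find_anomalies(readings, threshold=3.5):
    """Return (index, value) pairs whose modified z-score exceeds the threshold."""
    median = statistics.median(readings)
    deviations = [abs(x - median) for x in readings]
    mad = statistics.median(deviations)
    if mad == 0:
        # All readings are (nearly) identical, so there is no spread to score against.
        return []
    anomalies = []
    for index, value in enumerate(readings):
        # 0.6745 rescales the MAD so the score is comparable to a z-score
        # for normally distributed data.
        score = 0.6745 * abs(value - median) / mad
        if score > threshold:
            anomalies.append((index, value))
    return anomalies


# Hypothetical temperature readings in degrees Celsius; one spike at index 5.
readings = [21.1, 21.3, 20.9, 21.0, 21.2, 35.7, 21.1, 20.8]
anomalies = find_anomalies(readings)
print(anomalies)
```

No labeled examples of "attack" or "fault" were needed here, which is the practical appeal of the approach. The trade-off noted in the text also shows up: the detector reports that a reading is unusual, not why.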

Both approaches have their advantages and disadvantages. While supervised learning offers high accuracy, it can be time-consuming and requires expertise to label data.

Unsupervised learning can handle vast amounts of data and discover unknown patterns but may yield less transparent results.

Ultimately, our choice between these methods depends on the nature of our data and the specific goals we aim to achieve. Understanding these distinctions helps us implement effective machine learning strategies tailored to our IoT security needs.

Ensemble Methods for IoT Security

Leveraging ensemble methods enhances our approach to IoT security by combining multiple machine learning algorithms to improve predictive performance. These techniques allow us to tackle the growing complexity of intrusion detection systems (IDS) in interconnected devices. By utilizing methods like voting and stacking, we merge various models to achieve better accuracy, precision, and recall compared to single learning algorithms.
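The voting idea mentioned above can be sketched in a few lines. This is a minimal hard-voting example that assumes each model has already produced one label per traffic sample. The model names and predictions are hypothetical, and stacking, which trains a further model on these outputs, is not shown.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-model label lists into one list by majority vote per sample."""
    combined = []
    for labels in zip(*predictions):
        # most_common(1) returns the label with the highest count for this sample.
        winner, _count = Counter(labels).most_common(1)[0]
        combined.append(winner)
    return combined


# Hypothetical outputs of three intrusion detection models on four samples.
model_a = ["normal", "attack", "attack", "normal"]
model_b = ["normal", "attack", "normal", "normal"]
model_c = ["attack", "attack", "normal", "normal"]

ensemble = majority_vote([model_a, model_b, model_c])
print(ensemble)
```

Using an odd number of models with two labels avoids ties. With more labels or an even number of models, a tie-breaking rule would need to be chosen explicitly.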

Recent studies show that ensemble methods can reach up to 99% accuracy in anomaly detection, significantly addressing issues related to imbalanced data. Moreover, incorporating robust feature selection methods, such as chi-square analysis, helps enhance IDS performance by identifying relevant features that contribute to accurate predictions.

The TON-IoT dataset, which includes realistic attack scenarios and regular traffic, serves as a reliable benchmark for testing our models. With credible datasets, we can ensure that our machine learning approaches are effective in real-world applications.

As we continue to refine these ensemble techniques, we must focus on overcoming challenges like rapid system training and computational efficiency, ensuring our IDS remain effective against evolving cyber threats. By embracing these strategies, we can significantly bolster IoT security and protect our interconnected environments.

Deep Learning Applications in IoT

Building on the effectiveness of ensemble methods in enhancing IoT security, we find that deep learning applications offer even greater potential for analyzing complex sensor data.

By leveraging neural networks, we can extract intricate patterns and insights from vast amounts of data generated by IoT devices. This helps us not only in identifying anomalies but also in predicting potential failures before they occur.

Here are some key areas where deep learning excels in IoT:

Anomaly Detection: Recognizing unusual patterns that may indicate security breaches or operational issues.

Predictive Maintenance: Anticipating equipment failures to reduce downtime and maintenance costs.

Image and Video Analysis: Enabling real-time surveillance and monitoring through advanced visual recognition techniques.

Natural Language Processing: Enhancing user interaction with IoT systems through voice commands and chatbots.

Energy Management: Optimizing energy consumption in smart homes and industrial setups, thereby improving sustainability.

Frequently Asked Questions

What Machine Learning (ML) Techniques Are Used in IoT Security?

We’re using various machine learning techniques for IoT security, including supervised and unsupervised learning, anomaly detection, and ensemble methods. These approaches help us identify threats and enhance the overall safety of interconnected devices.

What Are Advanced Machine Learning Techniques?

We’re exploring advanced machine learning techniques, which include algorithms that enhance data analysis, facilitate pattern recognition, and improve predictive accuracy. These methods help us make better decisions and optimize various applications across different industries.

How Will Machine Learning Techniques Help IoT-Based Applications?

We believe machine learning techniques can transform IoT applications by enhancing data processing, improving security, predicting failures, and optimizing maintenance. They enable predictive analytics, help us identify unusual patterns, streamline operations, and optimize resource management across various sectors. These advancements not only boost efficiency but also protect our interconnected environments from potential threats.

Conclusion

In conclusion, by harnessing advanced machine learning techniques, we’re transforming how IoT sensors process and analyze data. These methods not only enhance our ability to detect anomalies but also empower us to make informed decisions in real-time. As we continue to explore supervised and unsupervised learning, along with ensemble and deep learning approaches, we’re paving the way for more efficient and secure IoT systems. Let’s embrace these innovations to unlock the full potential of our connected devices.

Sign up for free courses here.

Visit Zekatix for more information.

#courses#artificial intelligence#embedded systems#embeded#edtech company#online courses#academics#nanotechnology#robotics#zekatix


Text

The Sequence Opinion #537: The Rise and Fall of Vector Databases in the AI Era

New Post has been published on https://thedigitalinsider.com/the-sequence-opinion-537-the-rise-and-fall-of-vector-databases-in-the-ai-era/


Once regarded as a super-hot category, it is now becoming increasingly commoditized.

Created Using GPT-4o

Hello readers, today we are going to discuss a really controversial thesis: how vector DBs became one of the most hyped trends in AI, only to fall out of favor within a few months.

In this new gen AI era, few technologies have experienced a surge in interest and scrutiny quite like vector databases. Designed to store and retrieve high-dimensional vector embeddings—numerical representations of text, images, and other unstructured data—vector databases promised to underpin the next generation of intelligent applications. Their relevance soared following the release of ChatGPT in late 2022, when developers scrambled to build AI-native systems powered by retrieval-augmented generation (RAG) and semantic search.
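The core operation described above, storing embeddings and retrieving the ones closest to a query, can be sketched without any database at all. The example below is a brute-force cosine similarity search over a tiny in-memory index. The three-dimensional vectors and their labels are hypothetical stand-ins for real embeddings, which typically have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, index, k=2):
    """Return the k item names whose vectors are most similar to the query."""
    ranked = sorted(
        index,
        key=lambda name: cosine_similarity(query, index[name]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical embeddings for illustration only.
index = {
    "cat": (0.9, 0.1, 0.0),
    "kitten": (0.8, 0.2, 0.1),
    "invoice": (0.0, 0.1, 0.9),
}
query = (1.0, 0.1, 0.0)

results = nearest(query, index, k=2)
print(results)
```

A linear scan like this compares the query against every stored vector, which is fine for a handful of items but not for millions. Dedicated vector databases and vector extensions to existing databases mainly differ in how they avoid that full scan, usually with approximate nearest-neighbor indexes.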

This essay examines the meteoric rise and subsequent repositioning of vector databases. We delve into the emergence of open-source and commercial offerings, their technical strengths and limitations, and the influence of traditional database vendors entering the space. Finally, we contrast the trajectory of vector databases with the lasting success of the NoSQL movement to better understand why vector databases, despite their value, struggled to sustain their standalone identity.

The Emergence of Vector Databases

#2022#ai#applications#chatGPT#data#Database#databases#developers#embeddings#era#Experienced#gen ai#GPT#how#identity#images#intelligent applications#movement#One#OPINION#Other#RAG#search#Space#standalone#store#Success#text#Trends#unstructured data


Text

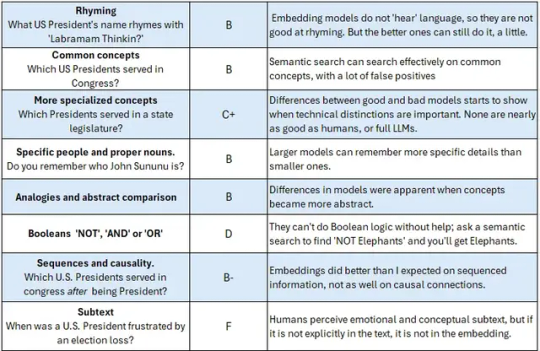

Embeddings Scorecard


Text

AI is becoming a make-or-break factor for banks.

Success, however, will depend not on their ability to offer #AI but on their competence in integrating it. Let’s take a look.

Among all sectors and industries, banking is forecast to feel the biggest impact from generative AI as a percentage of revenue, with the additional value estimated at between $200 bn and $340 bn annually (source: McKinsey).

#ai#artificialintelligence#artificial intelligence#fintechstartups#fintech#fintechnews#embedded banking#digital banking#neobank


Text

3 Ways Apple Intelligence And Embedded AI Will Change Daily Life

Apple Intelligence exemplifies the embedded character...see more

#appleinnovation#apple intelligence#embedded ai#embedded#ai#ai art#apple#iphone#technology#tech#artificial intelligence#phone#change#daily life


Text

What are the applications of embedded systems?

Embedded systems are specialized computing systems designed to perform specific tasks or functions within a larger system. They combine hardware and software components to operate efficiently in real-time environments. Embedded systems are commonly used in various industries due to their reliability, compact size, and energy efficiency.

One of the most prominent applications of embedded systems is in the automotive industry. Modern vehicles rely on embedded systems for engine control, anti-lock braking systems (ABS), airbag deployment, and infotainment systems. These systems ensure safety, performance, and user comfort.

In consumer electronics, embedded systems power devices such as smartphones, smart TVs, and gaming consoles. They enable features like touch interfaces, connectivity, and seamless user experiences. Similarly, home automation devices like smart thermostats, security cameras, and robotic vacuum cleaners depend on embedded systems to function intelligently.

Healthcare is another domain where embedded systems play a crucial role. They are used in medical devices like pacemakers, blood pressure monitors, and diagnostic equipment to provide precise and real-time data for patient care.

In the industrial sector, embedded systems are integral to manufacturing processes. They control machinery, monitor production lines, and ensure operational efficiency in smart factories. Additionally, they are used in aerospace, telecommunications, and renewable energy systems for mission-critical tasks.

Embedded systems also enable IoT (Internet of Things) devices, connecting everyday objects to the internet for data collection and automation. Applications include smart cities, wearable technology, and environmental monitoring.

The versatility of embedded systems continues to expand as technology advances. For those interested in mastering this field, pursuing an embedded system certification course can open doors to numerous career opportunities in these dynamic industries.

#embedded systems#data analytics#machine learning#datascience#artificial intelligence#internetofthings#coding#embedded
